Update (2026): ChatGPT can now analyze uploaded videos

What ChatGPT can do with video ✅

Analyze frames and visuals

You upload:

- a video file

- screenshots or frames from the video

ChatGPT can:

- describe scenes and actions

- detect objects, text, and UI elements

- analyze gestures, layouts, and user flows

- explain what’s happening step by step

Tip: If your goal is deeper review (UX issues, unclear steps, pacing), include a short note like “focus on onboarding friction” or “spot where users might get confused.”

Why UXsniff Takes a Different Approach Than Video Analysis

ChatGPT does a decent job understanding what a video is about and its general context once the video is uploaded. However, video analysis is resource-intensive. Decoding frames, tracking visual changes, and inferring user intent from pixels alone requires significant compute and still involves interpretation gaps when it comes to precise user actions.

UXsniff takes a fundamentally different approach. Instead of storing heavy video files, UXsniff records sessions as structured DOM mutations and interaction events. This allows AI to read and understand every click, scroll, input, and state change directly at the source. Because the data is semantic rather than visual, the AI does not need to guess what happened on screen.
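To make the contrast with video concrete, here is a minimal sketch of what a session looks like once it is a list of structured events. The `InteractionEvent` class and its field names are hypothetical and chosen only for illustration; they are not UXsniff's actual schema.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class InteractionEvent:
    """One recorded interaction. All field names are hypothetical."""
    timestamp_ms: int  # milliseconds since the session started
    kind: str          # e.g. "click", "scroll", "input"
    target: str        # CSS selector of the element involved


def count_by_kind(events):
    """Return how many events of each kind the session contains."""
    return Counter(event.kind for event in events)


# A tiny made-up session, in chronological order.
session = [
    InteractionEvent(0, "scroll", "body"),
    InteractionEvent(1200, "click", "#signup-button"),
    InteractionEvent(1850, "input", "#email"),
    InteractionEvent(4100, "click", "#submit"),
]

print(count_by_kind(session))
```

Because each event already names its type and target, counting clicks is a lookup rather than an inference from pixels.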

As a result, UXsniff can interpret user behavior with far greater accuracy and consistency. Every interaction is explicit, measurable, and reproducible, enabling the AI to generate deeper and more reliable UX insights from session recordings than video-based analysis alone.

Read more: How UXsniff Analyzes User Behavior from Session Recordings Using AI

How Session Recording Analysis Worked in 2023

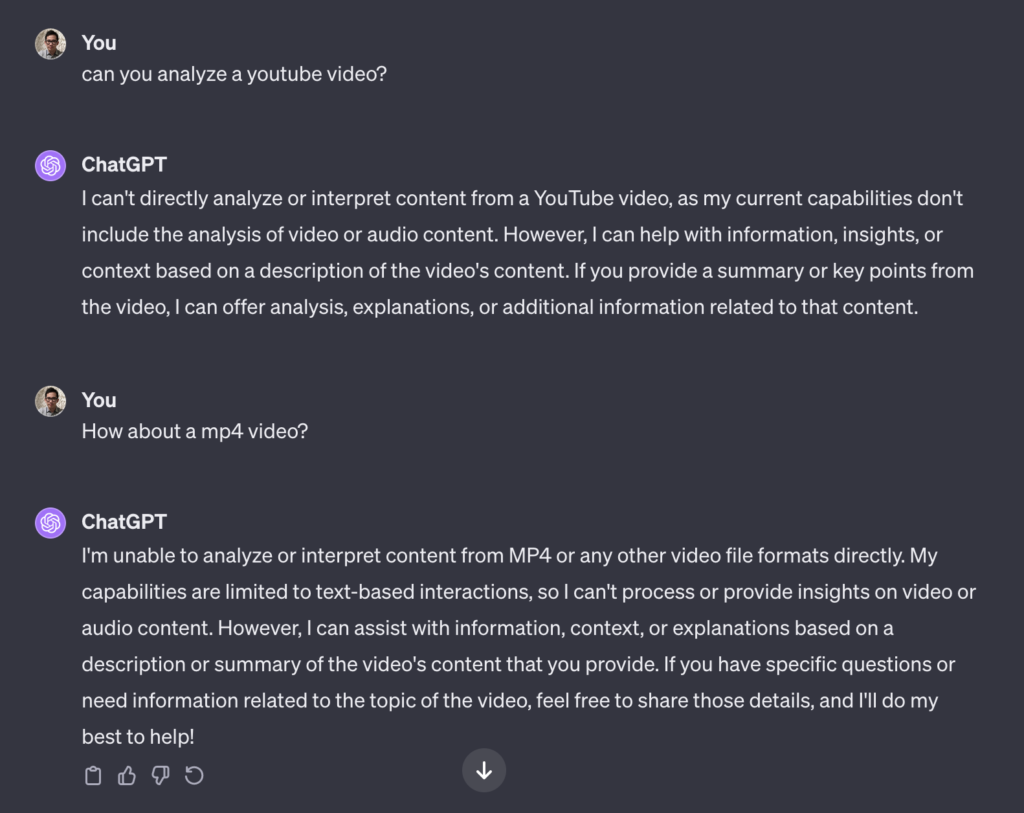

Back in 2023, ChatGPT did not have the ability to analyze video files directly. While it could reason over text, tables, and structured data, video content still required manual review or external tools to interpret. For teams working with session recordings or user behavior data, this meant relying heavily on human analysis to understand what was happening inside each session.

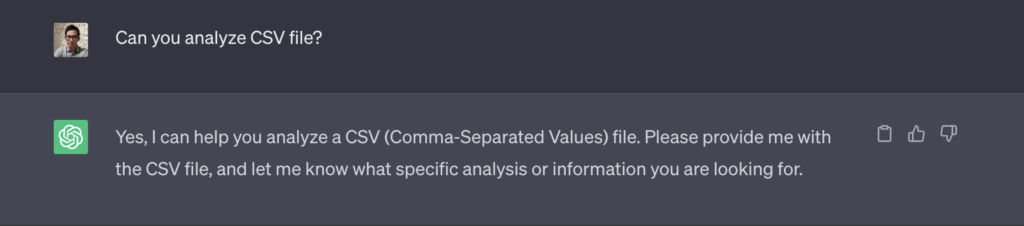

UXsniff’s session recordings are not stored as traditional video files, but as BLOBs (Binary Large Objects) containing raw data. This raw recording data can be conveniently converted into JSON or CSV formats for analysis and use.
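As a rough illustration of the JSON-to-CSV step, the sketch below assumes the recording has already been decoded into a JSON array with one flat object per page view. The sample values and the "duration" field are made up for this example; the real export layout may differ.

```python
import csv
import io
import json

# Hypothetical decoded recording: one JSON object per page view.
# "duration" is an assumed field name for time spent, in milliseconds.
RAW_JSON = """
[
  {"URL": "/home", "start": 0, "duration": 5000, "clicks": 2, "dclicks": 0},
  {"URL": "/pricing", "start": 5000, "duration": 12000, "clicks": 4, "dclicks": 1}
]
"""


def json_to_csv(raw_json):
    """Convert a JSON array of flat objects into CSV text."""
    rows = json.loads(raw_json)
    buffer = io.StringIO()
    # Use the keys of the first object as the CSV header.
    writer = csv.DictWriter(
        buffer, fieldnames=list(rows[0].keys()), lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


csv_text = json_to_csv(RAW_JSON)
print(csv_text)
```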

In the previous article, we analyzed around 200 videos to gather insights. Now, we are curious whether ChatGPT is capable of analyzing session recordings.

Check whether ChatGPT can analyze a CSV file

Next, we’ll export the raw recording data to CSV and paste it into ChatGPT.

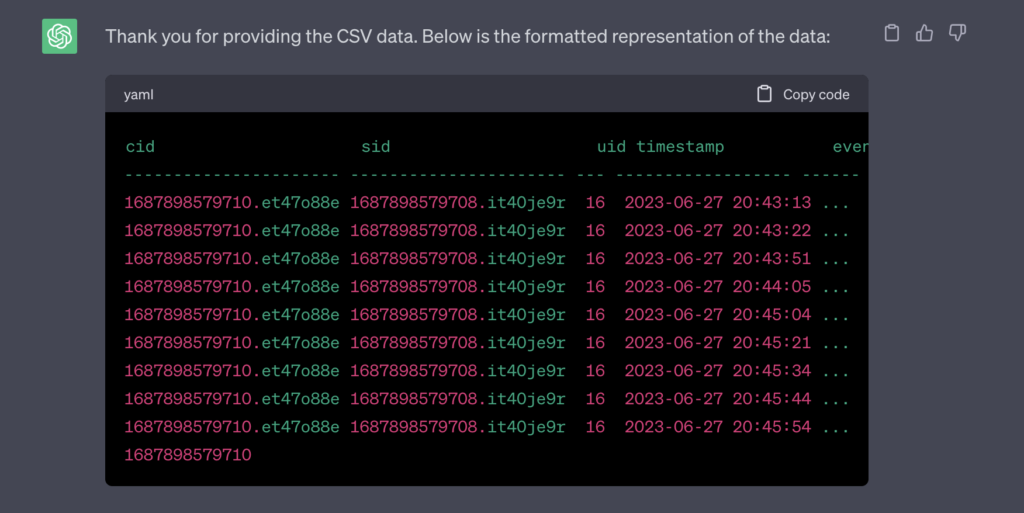

Start querying ChatGPT using natural language

Once we have done that, we can ask GPT some questions regarding this session recording. One of the questions we’ll ask is, “Which URL did this user spend the most time on?”

Impressively, even though we never told GPT which column holds the time spent or that its unit is milliseconds, GPT picked the correct column and returned the right answer.
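If you want to double-check an answer like this yourself, the same question can be computed directly from the CSV. This is a minimal sketch: the sample rows are made up, and "duration" is an assumed name for the time-spent column (in milliseconds), since your export may label it differently.

```python
import csv
import io
from collections import defaultdict

# Hypothetical export; "duration" (milliseconds) is an assumed column name.
CSV_TEXT = """URL,start,duration,clicks
/home,0,5000,2
/pricing,5000,12000,4
/home,17000,3000,1
"""


def time_per_url(csv_text, time_column="duration"):
    """Sum the time column for each URL, in milliseconds."""
    totals = defaultdict(int)
    for row in csv.DictReader(io.StringIO(csv_text)):
        totals[row["URL"]] += int(row[time_column])
    return dict(totals)


totals = time_per_url(CSV_TEXT)
longest_url = max(totals, key=totals.get)
print(longest_url, totals[longest_url] / 1000, "seconds")
```

Summing per URL matters when the same page is visited more than once in a session, as "/home" is in this sample.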

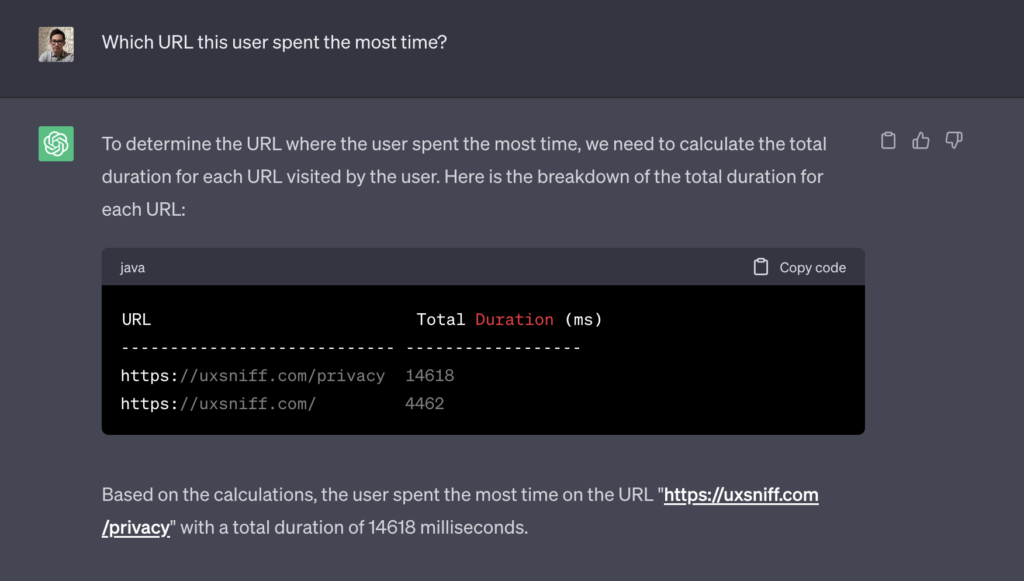

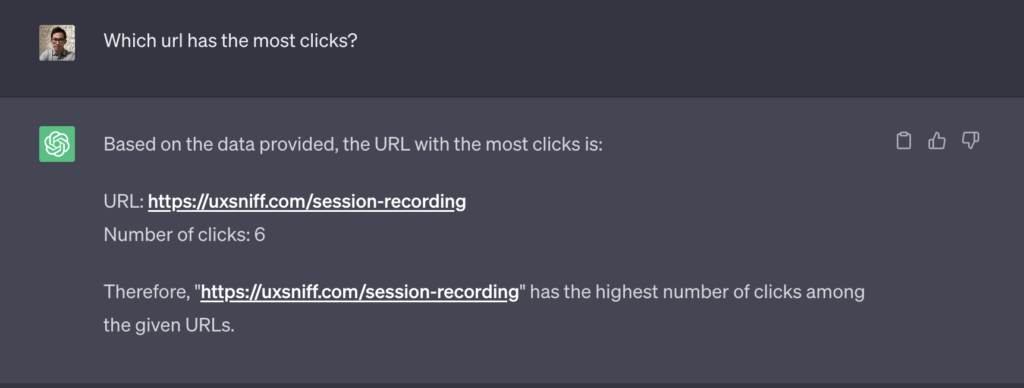

When we asked a question that used the column headers “URL” and “clicks” directly, GPT understood the query and returned the correct answer.

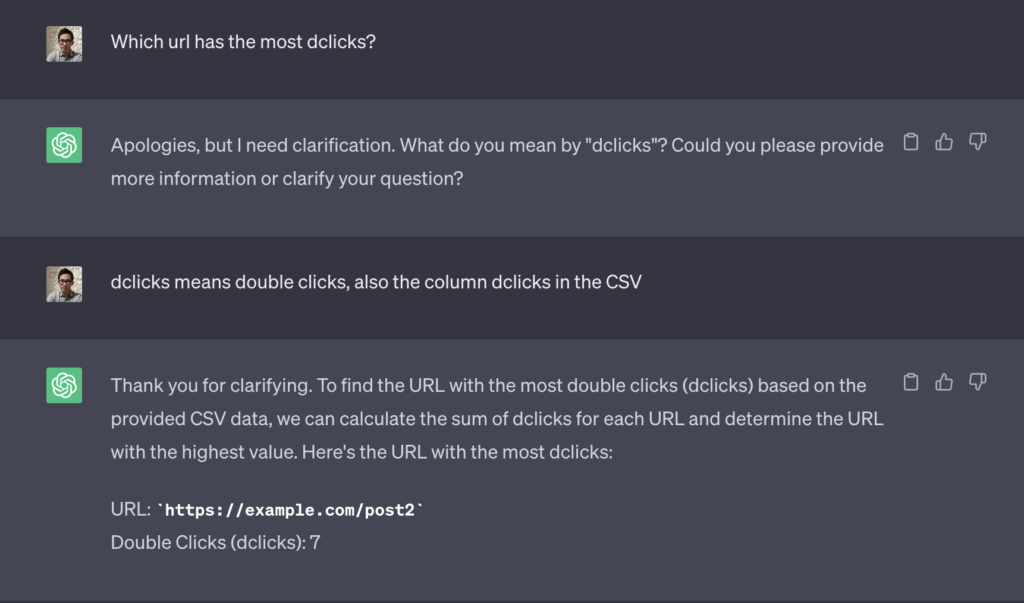

However, a column name like “dclicks” is not self-explanatory, even to humans, so GPT needs a definition of what it represents. GPT relies on the context it is given, and explaining unfamiliar column headers helps it answer accurately.

Clarify column names that are not self-explanatory
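One way to supply that context consistently is to prepend a short column glossary to every question. The sketch below is only an illustration: `build_prompt` is a hypothetical helper, the sample rows are made up, and the "dclicks" definition is deliberately left as a placeholder for you to fill in.

```python
def build_prompt(question, csv_text, column_notes):
    """Prefix a question with plain-language column definitions."""
    notes = "\n".join(
        f"- {name}: {meaning}" for name, meaning in column_notes.items()
    )
    return (
        "Column definitions:\n"
        f"{notes}\n\n"
        f"Data (CSV):\n{csv_text}\n"
        f"Question: {question}"
    )


# Placeholder definition: replace with what "dclicks" means in your export.
COLUMN_NOTES = {"dclicks": "<your definition of dclicks>"}

# Made-up sample rows for illustration.
CSV_TEXT = "URL,clicks,dclicks\n/home,2,0\n/pricing,4,1\n"

prompt = build_prompt("Which URL has the most dclicks?", CSV_TEXT, COLUMN_NOTES)
print(prompt)
```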

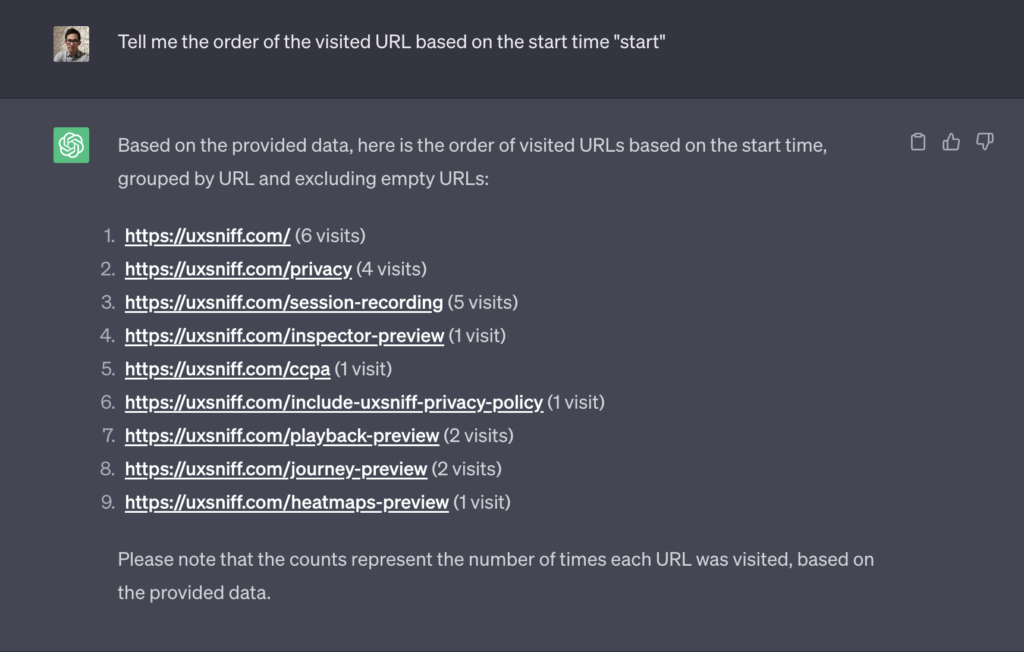

When asking for the order of visited URLs, it is important to tell GPT that the column labeled “start” holds the start time. With that clarification, GPT can sort the visits by that column and return the correct order.
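The ordering GPT produces can be verified with a few lines of code. This sketch uses made-up rows and assumes "start" holds a number that grows over time; the unit does not matter for sorting.

```python
import csv
import io

# Made-up rows, deliberately out of order.
CSV_TEXT = """URL,start
/pricing,5000
/home,0
/signup,17000
"""


def visit_order(csv_text):
    """Return the URLs sorted by their numeric "start" value."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    # Convert to int so values sort numerically, not as strings.
    rows.sort(key=lambda row: int(row["start"]))
    return [row["URL"] for row in rows]


order = visit_order(CSV_TEXT)
print(order)
```

The integer conversion is the important detail: CSV values are read as strings, and as strings "17000" would sort before "5000".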

Conclusion

The way we analyze user behavior is evolving rapidly. What started as manual video review and experimental AI-assisted data analysis has moved toward more structured, machine-readable approaches. Even as AI becomes capable of understanding richer media formats, the quality of insights still depends heavily on how the data is captured.

By storing session recordings as structured DOM mutations rather than traditional video files, UXsniff enables AI to understand user intent with greater precision and consistency. This approach reduces ambiguity, avoids the overhead of visual decoding, and allows meaningful UX insights to be generated at scale. As AI capabilities continue to advance, structured interaction data remains the most reliable foundation for understanding how users truly experience a product.